I do not let my son outsource thinking to a machine.

He uses Claude Code to help write C++ in Unreal Engine. That part is fine. What changed is how often the model started driving instead of assisting. The prompt goes in. The code comes back. It compiles. It runs. He moves on. Somewhere in that loop the learning stopped and the shipping continued.

So we put a PR process in place. I review the code. I ask questions cold. He answers without AI. If he cannot explain it, it does not merge.

The Boundary That Matters

He is allowed to use AI to explain to him why something works. He is not allowed to hide behind it. That distinction is the entire point. Accountability matters. Owning code means understanding it, defending it, and fixing it when it breaks. That still requires learning how to program, not just how to prompt.

As a parent and a mentor, letting him slide past that would be irresponsible. I have no issue with him learning AI. Quite the opposite. But the first lesson is boundaries. Tools do not absolve responsibility. They amplify it.

If you cannot explain the code without the model open, the model wrote it. You just pressed enter.

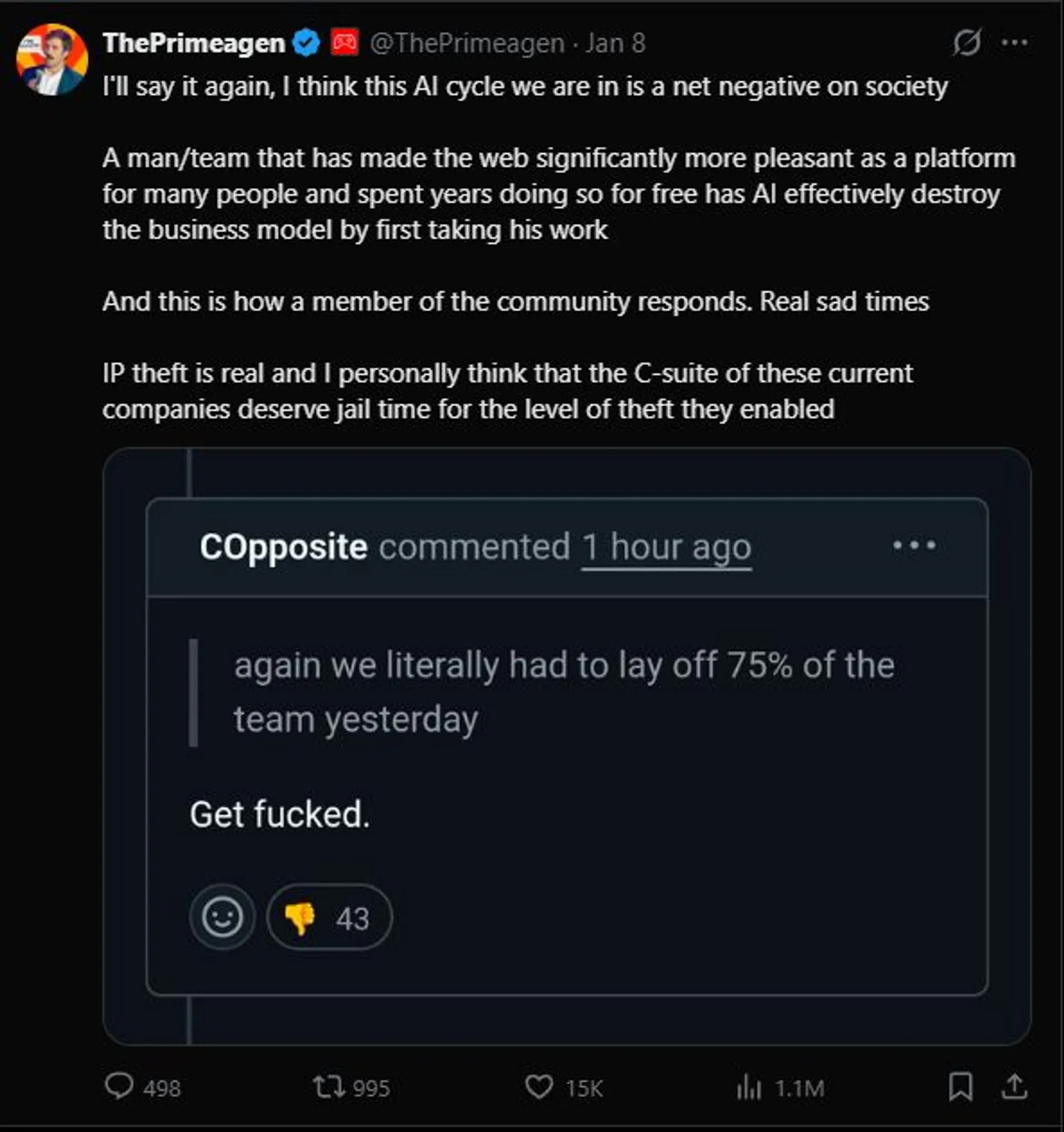

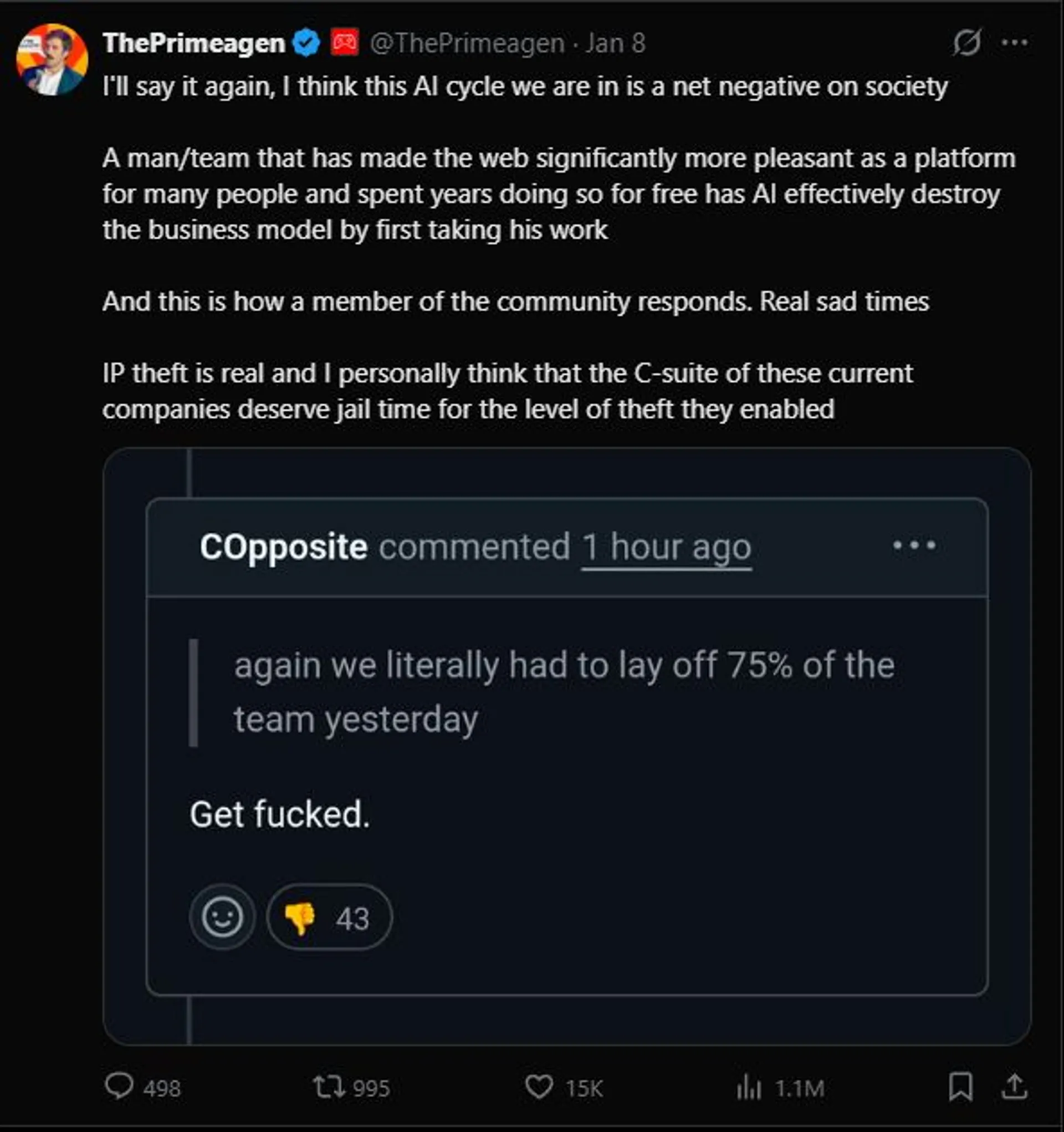

What worries me is how many people treat this as a painless step-over moment in programming. As if skill transfer is automatic. As if understanding arrives by osmosis. I agree with ThePrimeagen that AI is a net negative for society. You can still use it. Both things can be true.

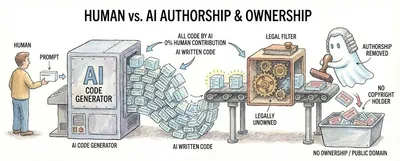

Scar Tissue Scales. Prompts Do Not.

I use AI every day. It scales three decades of hard-won experience. It accelerates recall. It helps explore options I would have spent hours sketching on a whiteboard. But I am constantly pulling it back from bad abstractions, side quests, and quietly broken code. I can see those failures because I spent years writing bad code myself.

That scar tissue matters.

A junior using AI and a senior using AI produce output that looks identical on a screen. The difference is what happens when it breaks. The senior recognises the shape of the failure. The junior sees only that it stopped working. One debugs. The other prompts again.

The junior does not lack intelligence. They lack the archive of past mistakes that tells you where to look first. AI cannot build that archive for you. It can only help you search it faster once you have it.

The Cockpit Analogy

Modern planes can take off and land themselves. The software is extraordinary. Yet there are still two humans in the cockpit. Not because the software is dumb, but because responsibility cannot be automated away. Even if all they do is nod in agreement, that nod carries accountability. When the system fails, someone has to take the stick. That someone needs to know how to fly.

Use the tool. Learn the tool. But never surrender ownership.

The Lesson

Code you cannot explain is not acceleration. It is tomorrow’s debt with interest compounding in silence.
